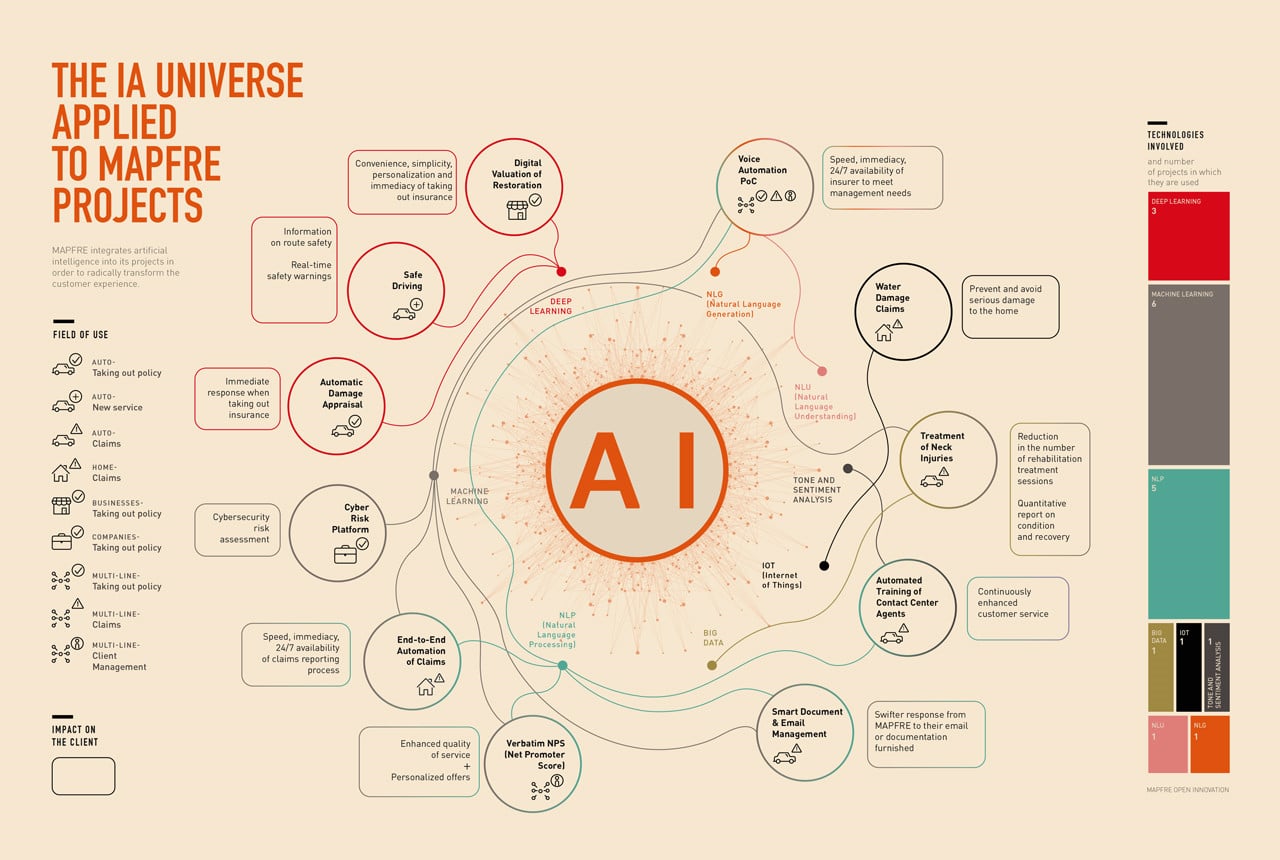

Nowadays we are not surprised to come across personalized advertising when browsing the Internet, or to receive recommendations for audiovisual content from streaming platforms that seem to have been made by someone who knows us better than we know ourselves. However, the potential of artificial intelligence (AI), the technology behind these situations, goes far beyond that. In the current COVID-19 pandemic, for example, it has been used to predict the number of ICU beds required, as well as in applications that offer rapid diagnoses by analyzing X-rays. Health, education, mobility, banking, insurance… Although this technology is at its peak nowadays, it has been in development for decades, and it can now offer solutions in almost every aspect of our lives.

Our colleagues Mireia Rojo (an Advanced Analytics expert) and Pedro Sacristán (Artificial Intelligence Lead) underscore how this new industrial revolution is affecting every sector, “from agriculture (where the advances it brings are expected to have an even greater impact than the introduction of machinery) through to the aforementioned field of health, where we can already see algorithms that are trained to analyze images and detect cancer with great efficacy.” Airlines that adjust their fares based on real-time calculations; investors whose stock purchases are guided by Internet data, compiled and processed to predict how certain securities will behave in the market; logistics companies that optimize their drivers' delivery routes… According to our experts, the list of fields where these techniques are applied, which of course includes insurance, is endless. At the start of the last decade, only one in 50 European startups focused on this technology; today the figure is almost one in ten. AI clearly is, and will undoubtedly remain, a leading component of many business models; however, to reap its full benefit, it is essential to understand how it works and what it can realistically achieve.

Algorithms at the bottom of it all

Although it may feel very modern, artificial intelligence emerged in the 1950s as a branch of computer science. Specifically, the term was coined in the 1955 proposal for a meeting held in 1956 at Dartmouth College (USA), which brought together experts in information theory, neural networks, computing, abstraction and creativity. Rather than a single technology, as we will see, AI is actually a myriad of technologies that seek to enable machines to perceive, understand, act and learn. The discipline therefore strives to develop computer systems capable of performing tasks normally attributed to human intelligence, such as recognizing objects, identifying faces, driving vehicles, detecting diseases or understanding natural language, both spoken and written. The list could run to thousands more, almost as many as the tasks we perform in our daily lives, and algorithms play a key role in all of them.

An algorithm is an ordered set of instructions, operations, steps or processes that allows a particular task to be completed or a problem to be solved. We could think of it as a list of preset instructions that guide the decisions to be made: for example, "if there is a STOP sign, bring the vehicle to a halt." Algorithms are the essence of any artificial intelligence system, and they are trained by feeding them as much data as possible to serve as references, so that they can keep learning and improving. Have you ever opened the photo gallery on your smartphone and seen a message asking you to confirm who the person in a picture is? That has a lot to do with what we are talking about here. In such a case, the device is asking for your help to gather more information and improve its ability to identify faces. Thanks to this fine-tuning, the next time you want to search for photos of a relative, you will simply have to type in their name and your smartphone will retrieve every picture associated with that person in under a second.

ARTIFICIAL INTELLIGENCE TIMELINE

1951: Creation of the first neural network, SNARC
1955: The concept of Artificial Intelligence is born
1967: The first pattern recognition model is developed: Nearest Neighbor
1974 - 1980
1985: NetTalk is invented and learns to pronounce words
1987 - 1993
1997: Deep Blue defeats the chess world champion
2006: New algorithms called “Deep Neural Networks” are developed that enable objects to be detected in pictures and videos
2010
2016: The AlphaGo program defeats the Go world champion
2018: Tesla launches self-driving vehicles

How does AI learn?

Depending on the capabilities that a machine can develop relative to human intelligence, artificial intelligence is classified into three types or levels: soft or weak AI, hard or strong AI, and superintelligence, Mireia and Pedro explain. Soft AI is what almost every company is implementing nowadays, and it is designed to solve highly specific, concrete tasks. With this kind of AI, machines offer us solutions they have learned from repetitive patterns and trends, thanks to algorithms programmed by humans. This, for example, is what virtual assistants such as Apple’s Siri, Amazon’s Alexa or Google Assistant use when we seem to be conversing with them; what they are actually doing is offering us replies to specific commands (such as “Tell me what the weather is like today”), based on the results of a search on the Internet or in their own databases.
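To illustrate what "replying to specific commands" means, here is a deliberately simple keyword matcher. It is a hypothetical sketch only: commercial assistants use trained language models rather than fixed keyword lists, and the intents, keywords and replies below (`INTENTS`, `FALLBACK`, `reply`) are invented for illustration. The point is that the system recognizes a narrow set of requests and maps each one to a prepared action, which is the hallmark of soft AI.

```python
import re

# Hypothetical intents: each maps trigger keywords to a canned reply.
# A real assistant would then query a weather service, alarm clock, etc.
INTENTS = {
    "weather": ({"weather", "rain", "temperature"}, "Looking up today's forecast..."),
    "alarm": ({"alarm", "wake"}, "Setting an alarm..."),
    "music": ({"play", "song", "music"}, "Playing music..."),
}
FALLBACK = "Sorry, I can't help with that yet."


def reply(command: str) -> str:
    """Return the reply of the intent whose keywords best match the command."""
    words = set(re.findall(r"[a-z']+", command.lower()))
    best_reply, best_hits = FALLBACK, 0
    for keywords, response in INTENTS.values():
        hits = len(words & keywords)  # how many trigger words appear in the command
        if hits > best_hits:
            best_reply, best_hits = response, hits
    return best_reply


print(reply("Tell me what the weather is like today"))  # Looking up today's forecast...
print(reply("Write me a poem"))                          # Sorry, I can't help with that yet.
```

Anything outside the predefined intents falls through to the fallback, which is exactly the limitation that separates soft AI from the hard AI described next.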

Hard AI, for its part, would have capabilities similar to those of human beings, making decisions proactively and deductively, of its own volition. If this were achieved, the algorithms would be able to understand, act and make decisions without waiting for orders or having to repeat the same task over and over again. To date, this type of AI exists only in science fiction, in movies already considered classics such as 2001: A Space Odyssey (1968), Blade Runner (1982) or The Matrix (1999), or, more recently, Her (2014), Ex Machina (2015) or Upgrade (2018). Looking even further ahead, the next step would be superintelligence, which, in theory, would surpass human capabilities in terms of both intelligence and skills.

As we indicated at the beginning, AI encompasses different techniques whose ultimate goal is for machines to learn from patterns extracted from data. The main one is machine learning which, although often confused with AI, is only one part of it. In machine learning, it is the machines themselves that derive their rules and predictions from the data supplied to them by humans, instead of having every rule programmed by hand. This is why, for example, the translation systems offered by online platforms such as Google Translate have improved so much in recent years: at first, they translated using syntactic rules, whereas now they learn from millions of examples of real translations found on the Web.
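The difference between a hand-written rule and a learned one can be shown with one of the simplest machine learning techniques, a "decision stump", which learns a single cut-off value from labelled examples. This sketch is an illustration chosen for brevity, not the method used by Google Translate or any other product; the spam-filter scenario, the data and the names `examples`, `learn_threshold` and `is_spam` are hypothetical.

```python
# Hypothetical training data: (number of links in an email, is it spam?).
examples = [(0, False), (1, False), (2, False), (3, False),
            (5, True), (6, True), (8, True), (9, True)]


def learn_threshold(data: list[tuple[float, bool]]) -> float:
    """Pick the cut-off that misclassifies the fewest training examples.

    The learned rule has the form 'spam if value > threshold'. Candidate
    thresholds are the midpoints between consecutive distinct values, so the
    data must contain at least two distinct values.
    """
    values = sorted({x for x, _ in data})
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(t: float) -> int:
        # Count the examples where the rule's verdict disagrees with the label.
        return sum((x > t) != label for x, label in data)

    return min(candidates, key=errors)


# Nobody wrote "4.0" into the program: the value comes from the data.
threshold = learn_threshold(examples)
print(threshold)  # 4.0


def is_spam(links: int) -> bool:
    """Apply the learned rule to a new, unseen email."""
    return links > threshold


print(is_spam(7))  # True
```

Feeding the same function different examples yields a different rule, which is the essence of the shift from programming rules to learning them.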

Within machine learning there is a more specific subfield that uses deep neural networks, known as ‘deep learning’. Its strength, which has developed tremendously in recent times, lies in stacking layer upon layer of data processing (neural networks). What sets it apart from other machine learning techniques is that the systems themselves, with little human intervention (for example, without engineers having to specify in advance which features of the data to look for), are able to learn and improve based on the experience they progressively acquire.
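The "layers of data processing" idea can be seen in a very small neural network trained from scratch. This is a minimal sketch under simplifying assumptions: it uses one hidden layer of four units (a deep network stacks many more), learns the XOR function (the output is 1 only when exactly one input is 1), and trains by plain gradient descent with backpropagation. All names and settings (`HIDDEN`, `LR`, the number of epochs, the random seed) are illustrative choices.

```python
import math
import random

# XOR cannot be separated by a single straight line, so a hidden layer is needed.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
HIDDEN = 4
LR = 1.0


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


rng = random.Random(0)
# Layer 1: 2 inputs -> HIDDEN units; layer 2: HIDDEN units -> 1 output.
w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
w2 = [rng.uniform(-1, 1) for _ in range(HIDDEN)]
b2 = 0.0


def forward(x: tuple[float, float]) -> tuple[list[float], float]:
    """Pass an input through both layers; return hidden activations and output."""
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
    y = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + b2)
    return h, y


def loss() -> float:
    """Mean squared error over the four training examples."""
    return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


initial_loss = loss()
for _ in range(5000):
    # Accumulate gradients over the whole dataset (full-batch gradient descent).
    gw1 = [[0.0, 0.0] for _ in range(HIDDEN)]
    gb1 = [0.0] * HIDDEN
    gw2 = [0.0] * HIDDEN
    gb2 = 0.0
    for x, t in DATA:
        h, y = forward(x)
        # Backpropagation: error signal at the output, then at each hidden unit.
        d_out = 2 * (y - t) * y * (1 - y) / len(DATA)
        gb2 += d_out
        for j in range(HIDDEN):
            gw2[j] += d_out * h[j]
            d_hid = d_out * w2[j] * h[j] * (1 - h[j])
            gw1[j][0] += d_hid * x[0]
            gw1[j][1] += d_hid * x[1]
            gb1[j] += d_hid
    # Move every weight a small step against its gradient.
    for j in range(HIDDEN):
        w2[j] -= LR * gw2[j]
        w1[j][0] -= LR * gw1[j][0]
        w1[j][1] -= LR * gw1[j][1]
        b1[j] -= LR * gb1[j]
    b2 -= LR * gb2

final_loss = loss()
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
for x, t in DATA:
    print(x, "target", t, "output", round(forward(x)[1], 2))
```

No rule for XOR is written anywhere; the network adjusts its own weights from the examples. With a network this small, how closely the outputs approach 0, 1, 1, 0 depends on the random starting weights.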

Some applications of AI

NLP

Natural Language Processing (NLP) is an application of AI linked to linguistics that processes commands (whether written or spoken) in a natural human language, i.e. in the same way as we would communicate with another person.

COMPUTER SPEECH

This converts a message in human language from one format to another, for example from audio to text or vice versa. It makes it possible to transcribe recordings or dictations, or to have the machine read a document aloud.

COMPUTER VISION

This enables the machine to recognize visual information, whether static or in motion. By understanding the contents of a photograph, drawing or video, it can recognize people or identify objects, which has a multitude of applications in fields such as security, mobility and leisure.
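A basic building block of many computer vision systems is convolution: a small grid of weights (a "kernel") slides across the image and responds strongly wherever a particular pattern appears. In convolutional neural networks these kernels are learned from data; the sketch below instead uses a fixed, hand-picked kernel that detects vertical edges. The 5×5 image, the kernel values and the function name `convolve` are illustrative assumptions, and, as in most deep-learning libraries, the operation computed is technically cross-correlation (the kernel is not flipped).

```python
# A tiny grayscale "image": dark on the left (0), bright on the right (9).
IMAGE = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]

# Responds where brightness increases from left to right (a vertical edge).
KERNEL = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]


def convolve(image: list[list[int]], kernel: list[list[int]]) -> list[list[int]]:
    """Slide the kernel over every position where it fits entirely (no padding)."""
    k = len(kernel)
    rows = len(image) - k + 1
    cols = len(image[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


for row in convolve(IMAGE, KERNEL):
    print(row)  # [27, 27, 0] on each row: large values mark the edge
```

The large values appear where the dark and bright regions meet, and zero where the image is uniform; stacking many such learned filters lets a network move from edges to shapes and, eventually, to faces or objects.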

ROBOTICS

This AI application is related to all of the above, since a robot can be designed to move around, perform actions, or understand and produce messages, depending on which of the capabilities described above (or which combination of them) are applied.